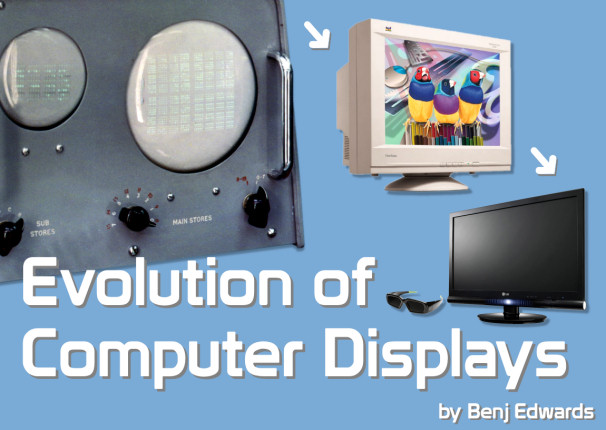

[ VC&G Anthology ] The Evolution of Computer Displays

September 17th, 2019 by Benj Edwards

Take a good look at this sentence. You’re reading it thanks to the magic of a computer display, whether it be LCD, CRT, or even printed out on paper. Since the beginning of the digital era, users have needed a way to view the results of programs run on a computer, but the manner in which computers have spit out data has changed considerably over the last 70 years. Let’s take a tour.

Get ready for a classic! This slideshow article was originally published on PCWorld.com on November 1, 2010 under the title “A Brief History of Computer Displays.”

Since the PCWorld version has broken images and is in jeopardy of disappearing (and I retained the rights to the piece), I decided to republish it here for historical reference. It’s still a great read! — Benj

—

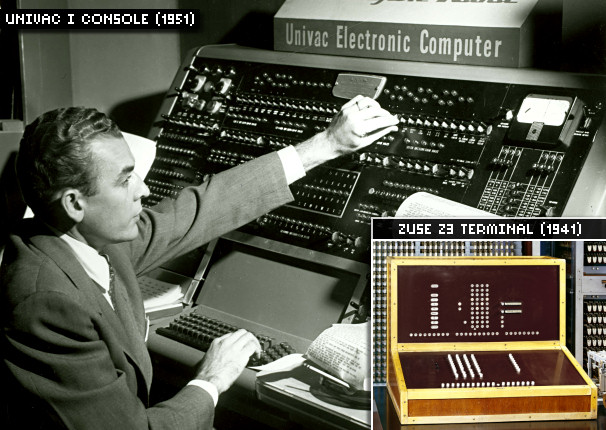

Blinking Indicator Lights

While almost every early computer provided some sort of hard copy print-out, the earliest days of digital computer displays were dominated by rows of blinking indicator lights — tiny light bulbs that flashed on and off when certain instructions were processed or memory locations were accessed. This allowed operators to get a window on what was going on inside the machine at any given moment, though the usefulness of reading results through flashing lamps was limited.

Photos: Computer History Museum, Deutsches Museum

—

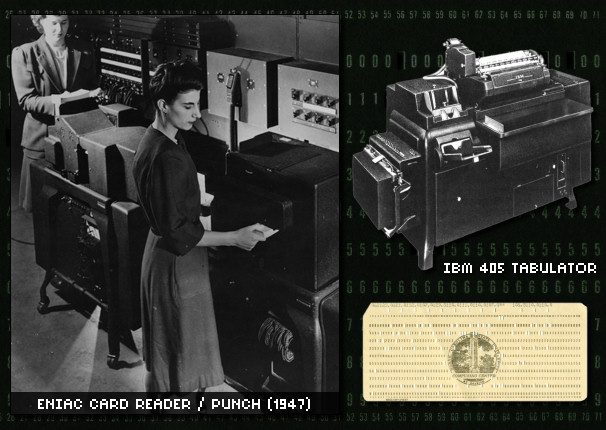

Punch Cards In, Punch Cards Out

The ENIAC, among other early electronic computers, used Hollerith punched cards as both input and output. To write a program, an operator typed on a typewriter-like machine that encoded the instructions into a pattern of holes punched into a paper card. They then dropped the stack of cards in the computer, and the computer read and ran the program. For output, the computer punched encoded results onto blank punch cards, which then had to be decoded with a device like the IBM 405 tabulator, seen here, which tallied and printed card values onto sheets of paper that humans could read directly.

Photos: Computer History Museum, IBM, Benj Edwards

—

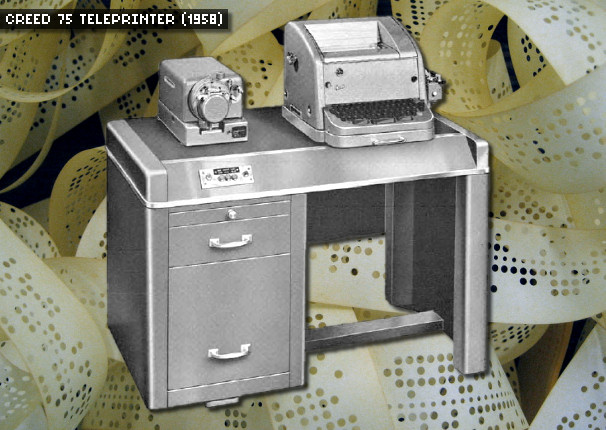

Decoding Paper Tape

As an alternative to punched cards, many early computers used long rolls of paper tape punched in a pattern that represented a computer program. Many of those same computers also punched the program results onto the same type of paper tape. An operator then ran the tape through a machine like the one seen here, and the electric typewriter automatically typed the computer output in human-readable form (numerals and letters) onto larger rolls of paper.

Photos: Ed Bilodeau, Creed

—

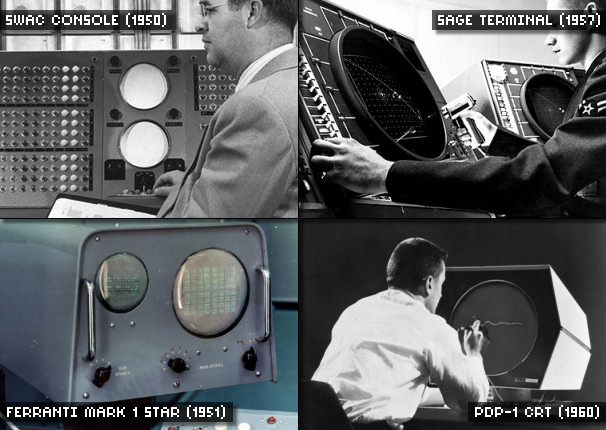

Early CRT Displays

The first cathode ray tubes (CRTs) appeared in computers as a form of memory, not as displays (see Williams tubes). It wasn’t long before someone realized they could use even more CRTs to show the contents of that CRT-based memory (as seen in the two computers on the left). Later, designers adapted radar and oscilloscope CRTs to use as primitive monochrome displays, like those in the SAGE system (stroke-drawing vector) and the PDP-1 (point-plot).

Photos (clockwise from top): Computer History Museum, MITRE, DEC, Onno Zweers

—

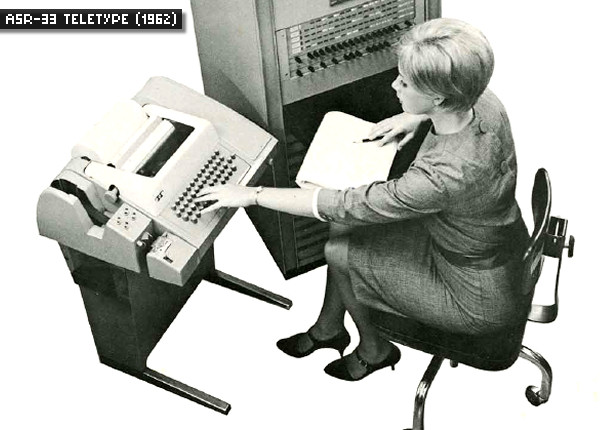

The Teletype Era

Prior to the invention of the electronic computer, people had been using teletypes to communicate over telegraph lines since 1902. A teletype is an electric typewriter that communicates with another teletype over wires (or later, over the radio) using a special code. By the 1950s, engineers were hooking teletypes directly up to computers to use as display devices. The teletypes provided a continuous printed output of a computer session. They remained the least expensive way to interface with computers until the mid-1970s.

Photo: Systems Engineering Laboratories

—

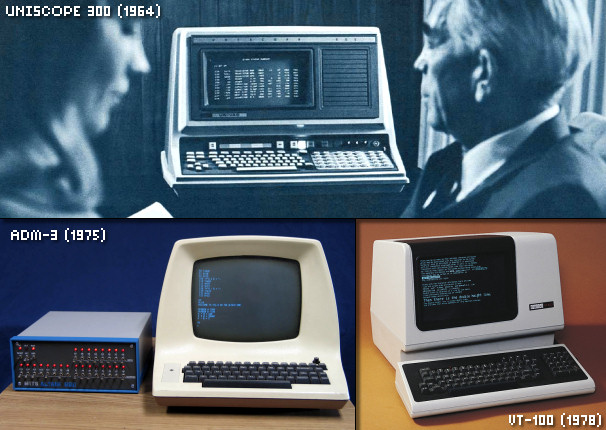

The Glass Teletype

Some time in the early 1960s, computer engineers realized they could use CRTs as virtual paper in a virtual teletype (hence the term “glass teletype,” an early name for such terminals). Video displays proved far faster and more flexible than paper; such terminals became the dominant method for interfacing with computers in the early to mid-1970s. The devices hooked to computers through a cable that commonly transmitted only codes for text characters, usually with no graphics, although some had graphical capabilities thanks to special text-based protocols. Few CRT terminals supported color until the 1980s.

Photos: UNIVAC, Grant Stockly, DEC

—

Composite Video Out

Teletypes (even paper ones) cost a fortune in 1974 — far out of reach of the individual in the do-it-yourself early PC days. Seeking cheaper alternatives, three folks (Don Lancaster, Lee Felsenstein, and Steve Wozniak) hit upon the same idea at the same time: Why not build a cheap terminal device using an inexpensive CCTV video monitor as a display? It wasn’t long before both Wozniak and Felsenstein built such video terminals directly into computers themselves (the Apple I and the Sol-20 respectively), creating the first computers with factory video outputs in 1976.

Photos: Steven Stengel, Michael Holley

—

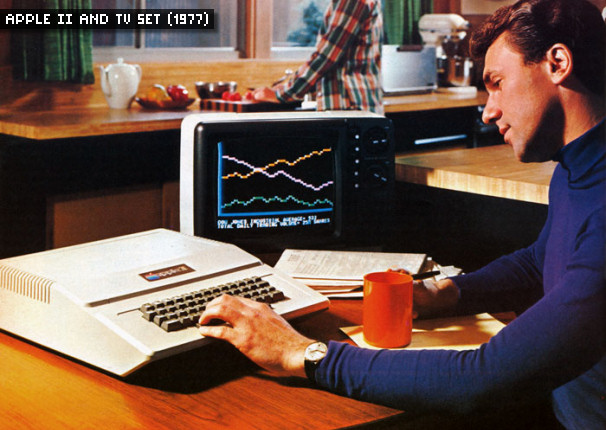

A Monitor Already in Every Home – TV Displays

With video outputs came the ability to use ordinary television sets as computer monitors. Enterprising businessmen manufactured RF (radio frequency) modulator boxes for the Apple II that converted composite video into a signal that simulated an over-the-air broadcast — something a TV set could understand.

The Atari 800 (1979), like video game consoles of the time, included an RF modulator in the computer itself, and others followed. However, bandwidth constraints and the coarse dot pitch of consumer TV sets limited the useful output to low resolutions, so “serious” computers eschewed TVs for dedicated monitors.

Photo: Apple, Inc.

—

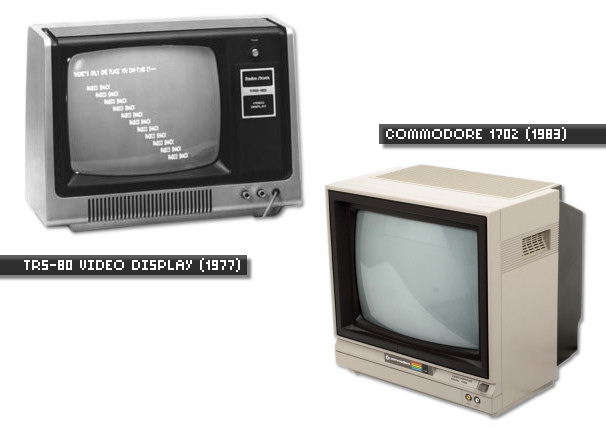

More Composite Monitors

In addition to RF television output, many early home PCs supported composite video monitors (seen here) for a higher-quality image. (The Commodore 1702 also offered an alternative, higher-quality display through an early S-Video-like connection.)

As the PC revolution came into full swing, computer makers (e.g., Apple, Commodore, Radio Shack, TI) began to design and brand video monitors, both monochrome and color, specifically for their personal computer systems. Most of those monitors were completely interchangeable between systems.

Photos: Radio Shack, Shane Doucette

—

Early Plasma Displays

In the 1960s, an alternative display technology emerged that used a charged gas trapped between two glass plates. When a charge was applied across the sheets in certain locations, a glowing pattern emerged. One of the earliest computer devices to use a plasma display was the PLATO IV terminal. Later, companies like IBM and GRiD experimented with the relatively thin, lightweight displays in portable computers. The technology never took off for PCs, but it surfaced again years later in flat panel TV sets.

Photos: Simon Bisson, Corestore, Steven Stengel

—

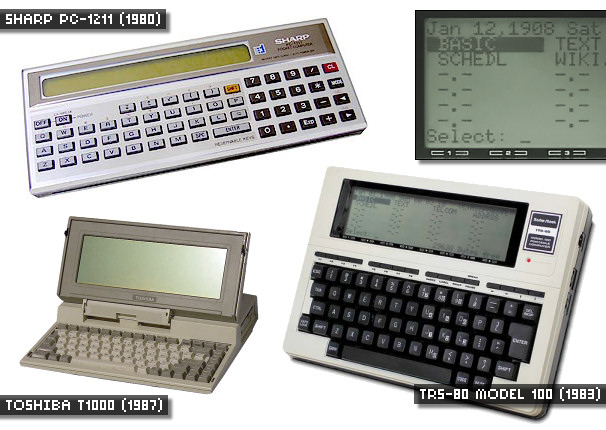

The Early LCD Era

Yet another alternative display technology, the liquid crystal display (LCD), arrived on the scene in the 1960s and made its commercial debut in pocket calculators and wristwatches in the 1970s. Early portable computers of the 1980s made good use of LCDs, which were extremely energy-efficient, lightweight, and thin compared to the alternatives. These early LCDs were monochrome only and low in contrast, and they required a separate backlight or direct illumination to be read properly.

Photos: pc-museum.com, old-computers.com, Steven Stengel

—

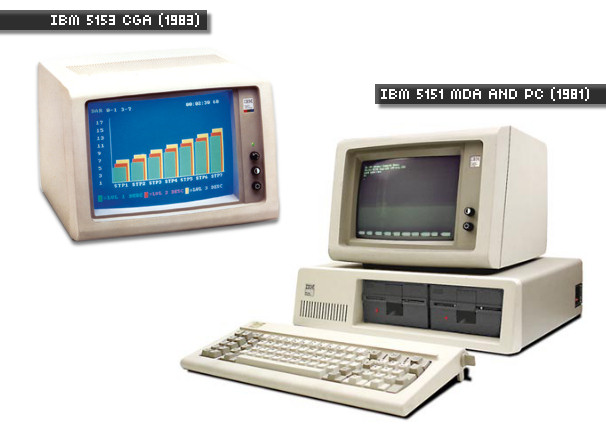

Early IBM PC Displays

The IBM PC (1981) shipped with a directly attached monochrome video display standard, MDA, that rivaled a video terminal in sharpness. For color graphics, IBM designed the CGA adapter, which hooked to a composite video monitor or the IBM 5153 display (which used a special TTL RGB connection). In 1984, IBM introduced EGA, which brought with it higher resolutions, more colors, and of course, new monitors. Various third-party IBM PC video standards competed with these in the 1980s; none won out like IBM’s did.

Photos: IBM, Steven Stengel (R)

—

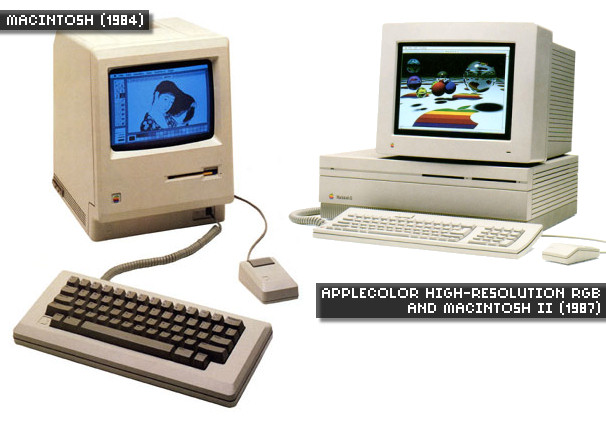

Macintosh Displays

The first Macintosh (1984) included a 9″ monochrome monitor that crisply rendered the Mac’s 512×342 bitmapped graphics in either black or white (no shades of gray here). It wasn’t until the Macintosh II (1987) that the Mac line officially supported both color video and external monitors. The Mac II video standard was similar to VGA, which we’ll learn about later. Mac monitors continued to evolve with the times, all the while being widely known for their sharpness and accurate color representation.

Photos: Apple, Inc.

—

RGB to the Rescue

The 1980s saw the launch of competitors to both the Macintosh and IBM PC that prided themselves on sharp, high-resolution, color graphics. The Atari ST series and the Commodore Amiga series both included proprietary analog monochrome and RGB monitors that allowed users of those systems to enjoy their computers’ graphics to the fullest.

Photos: Bill Bertram (L), Steven Stengel (R)

—

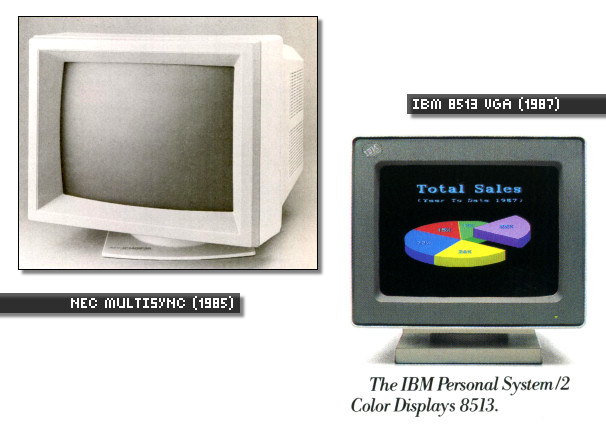

Two Important Innovations: Multisync and VGA

In the early days of the IBM PC, one needed a different monitor for each display scheme, whether it be MDA, CGA, EGA, or others. To solve this, NEC invented the first multisync monitor (called “MultiSync”), which dynamically supported a range of resolutions, scan frequencies, and refresh rates all in one box. That capability soon became standard in the industry.

In 1987, IBM introduced the VGA video standard and the first VGA monitors alongside its PS/2 line of computers. Almost every analog video standard since then has built on VGA (and its familiar 15-pin connector).

Photos: NEC, IBM

—

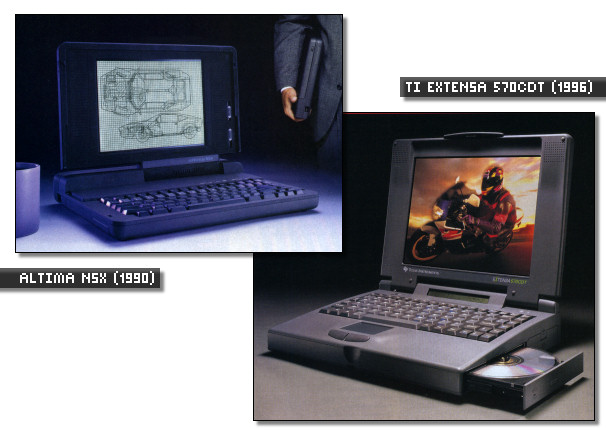

Laptop LCDs Improve

When we last saw LCDs, they were low-contrast monochrome affairs with slow refresh rates. Throughout the 1980s and 1990s, LCD technology continued to improve, driven by a market boom in notebook computers. The displays gained more contrast, better viewing angles, and advanced color capabilities, and they began to regularly ship with backlights for night viewing. The LCD would soon be poised to jump from the portable sector into the even more fertile grounds of the desktop PC.

Photos: Altima, Texas-Instruments

—

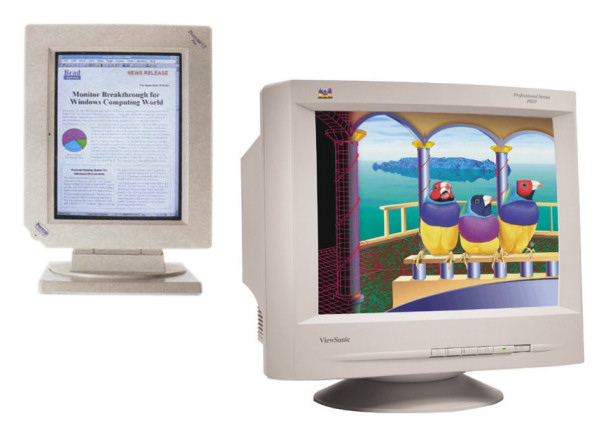

The Beige Box Era

It’s the mid-1990s in PC and Mac land, and just about all monitors are beige. We’re now firmly in the era of the inexpensive color multisync VGA monitor that can handle a huge range of resolutions with aplomb. Manufacturers at this time began experimenting with a wide range of physical sizes (from 14″ to 21″ and beyond) and shapes (the 4:3 ratio or the vertically-oriented full page display). Some CRTs even went flat in the late 1990s, as if envious of the new, more svelte LCD kid on the block.

Photos: Radius, ViewSonic

—

Early Desktop LCDs

Computer companies had experimented with desktop LCD monitors since the 1980s in small numbers, but those monitors tended to be very expensive and to perform poorly versus CRTs (lack of color, low contrast, low refresh rate, low resolution).

That changed around 1997, when suddenly a number of vendors like Dell (left), IBM (center), and Apple (right) introduced color LCD monitors with qualities that could finally begin to compete with CRT monitors for a reasonable price. These LCDs used less desk space and less electricity and generated far less heat than CRTs, which made them attractive to early adopters.

Photos: ViewSonic; IBM; Apple, Inc.

—

Present and Future

Today, LCD monitors (many widescreen) are standard across the PC industry (excepting tiny niche applications). Desktop LCD monitors first outsold CRT monitors in 2007, and their sales and market share continue to climb. Recently, LCD monitors have become so inexpensive that many experiment with dual monitor setups like the one seen here. A recent industry trend is toward monitors that support 3D through special glasses and ultra-high refresh rates. Monitors commonly hook to computers with pure digital connections such as DVI and HDMI.

As most TV sets have become fully digital, the line between monitor and TV is beginning to blur, as it did in the early 1980s. It’s now possible to buy a 42″ HD flat-panel display for under $999 that you can hook to your computer. Try conveying that to someone in the 1940s, back when people were still using paper, and their head might explode.

Photos: Samsung, GO.VIDEO, Asus

—

Original URL: http://www.pcworld.com/article/209224/a_brief_history_of_computer_displays.html

September 17th, 2019 at 1:11 pm

I remember working at Tiger Direct in the late 90s and a guy from Viewsonic came in and offered us employee pricing on LCD computer monitors. For only $900 I could get a 13″ 4:3 LCD monitor, which at the time was pretty good (I didn’t buy it).

September 22nd, 2019 at 4:53 am

These days, I am slow to upgrade my hardware since I just keep using stuff that still works until there are problems and deaths. I finally went LED 1080p HDTV and computer monitor in late 2014.

September 25th, 2019 at 8:07 pm

A while back I discovered that YouTube and archive.org have older episodes of Computer Chronicles, and on one episode from the mid-80s a representative from an LCD manufacturer was explaining the process of making larger LCD panels. At the time, all there was were thin LCD strips, and the only way to get over this limitation was to join several smaller panels, plus add the circuitry to make them viewable as one big screen. He went on to say that color LCDs were right around the corner, and that maybe in a decade we might see the beginning of large-screen LCD TVs. The other guest laughed at the idea.

October 6th, 2019 at 4:24 am

Tim, Computer Chronicles series was rad. Did you watch these MattChat interview parts from a few years ago?

https://www.youtube.com/watch?v=mBvT3B3sB_U

https://www.youtube.com/watch?v=OLHPBbszUpo

https://www.youtube.com/watch?v=BBORqsMkFpY